Generative AI Meets Branded Content: A Field Test by RiTE Media

Paris Schulman, CEO, RiTE Media

Executive Summary

Generative AI is expanding rapidly, and many of the claims made for it remain speculative. In advertising, the pressure is concrete: more formats, faster timelines, and rising demand for content that scales without losing brand integrity. With so much hype surrounding AI’s creative potential, RiTE Media set out to test what this technology can actually deliver in a commercial context.

From late 2023 through early 2025, we conducted a real-world test of AI’s capabilities in producing branded content. Our goal was to determine whether a custom-trained LoRA (Low-Rank Adaptation) model could match or enhance traditional production methods while maintaining legal and brand safety standards. The result: roughly 80% of AI-generated outputs fell short, while about 20% showed tangible, scalable value. This white paper outlines our methods, findings, tools, and takeaways for agencies, brands, filmmakers, and technologists.

Objectives

– Test whether LoRA-trained diffusion models can emulate a traditionally photographed food commercial.

– Explore AI’s capacity to generate high-volume deliverables from a minimal base of owned assets.

– Evaluate the cost, quality, and control trade-offs between traditional and AI-assisted workflows.

– Assess legal, ethical, and IP compliance standards around custom-trained models.

– Highlight the emerging convergence of AI with cinematographic motion and framing control.

Methodology & Workflow

Phase 1 – Traditional Production

– Shot a tabletop burger commercial using practical food styling, lighting, and photography.

– Captured dozens of high-resolution stills across multiple angles and lighting conditions.

– These stills served as the foundation for AI training.

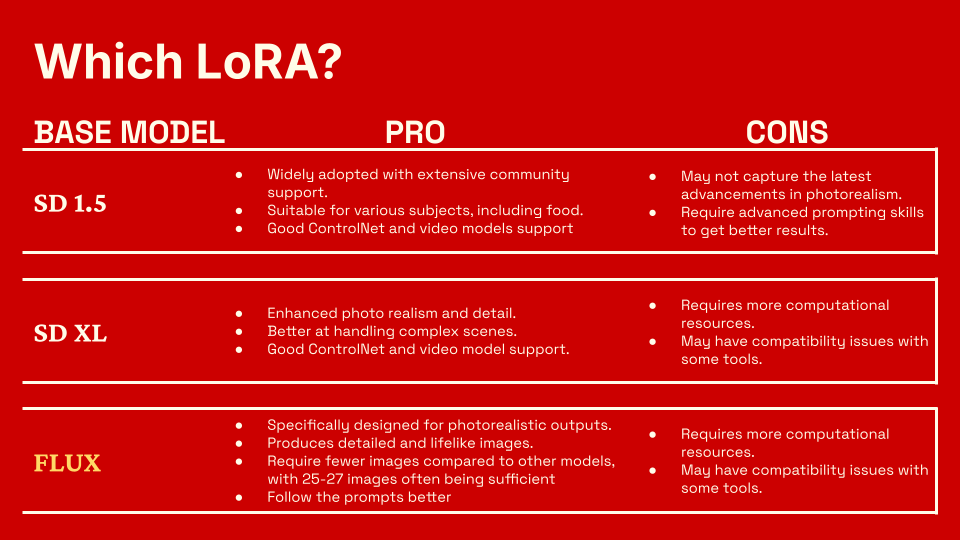

– FLUX → excels at photorealism, needs fewer images, but is resource-heavy.

– SD 1.5 → widely adopted, good community support, but less photorealistic.

– SD XL → better realism, handles complex scenes, but needs more resources.

Phase 2 – Model Training & Generation

– Trained a custom LoRA model using exclusively client-owned assets (no internet-sourced imagery).

– Collaborated with Undercurrent AI, an advanced AI research and consulting partner, to guide technical implementation.

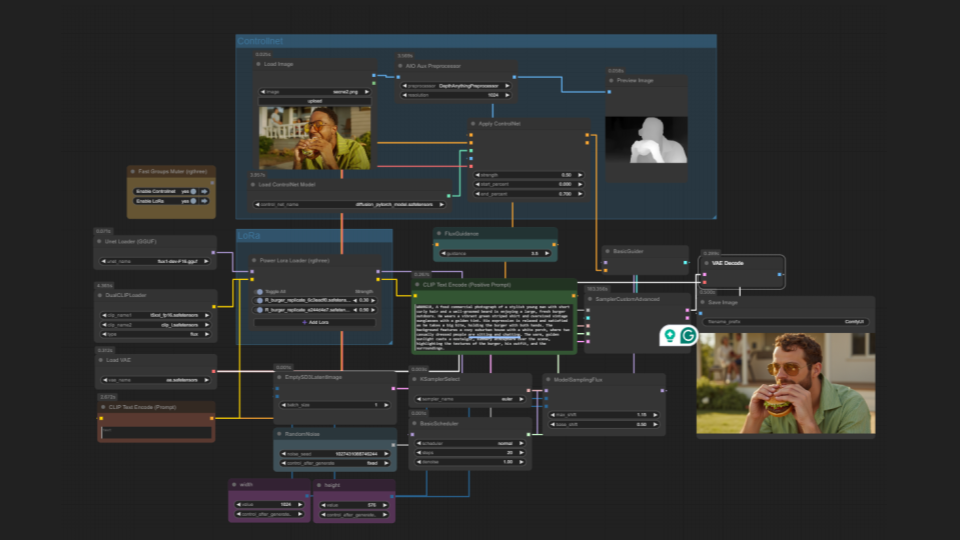

– Employed a variety of tools including ControlNet, IP Adapter, Depth-to-Image, and ComfyUI to enhance output fidelity and prompt control.

– Generated multiple iterations per angle, lighting setup, and background—resulting in over 500 images across four different models.

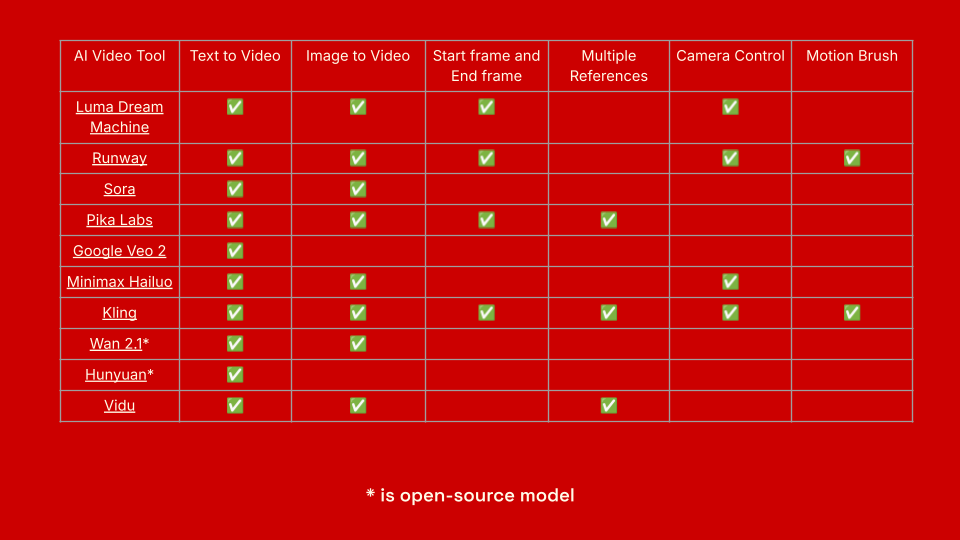
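
For readers unfamiliar with the technique, the short sketch below illustrates why a LoRA is comparatively cheap to train: instead of updating a full weight matrix, it trains two small matrices whose product is added to the frozen weights. The layer size and rank used here are illustrative assumptions, not the settings used in this test.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple:
    """Compare trainable parameters for one weight matrix.

    Full fine-tuning updates all d_out * d_in weights.
    LoRA freezes them and trains B (d_out x rank) and A (rank x d_in),
    whose product B @ A is added to the frozen weight.
    """
    full = d_out * d_in
    adapter = rank * (d_in + d_out)
    return full, adapter


# Illustrative (assumed) sizes: one 768 x 768 projection with a rank-16 adapter.
full, adapter = lora_param_counts(d_out=768, d_in=768, rank=16)
print(f"full fine-tune: {full} weights")
print(f"LoRA adapter:   {adapter} weights ({adapter / full:.1%} of full)")
```

Under these assumed sizes the adapter trains roughly 4% of the weights in that layer, which is in keeping with a small set of owned stills being enough to steer a large base model.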

Phase 3 – Motion Simulation

– Captured camera motion using an iPhone and motion tracking software such as SynthEyes.

– Applied this data to simulate camera movement in the AI generation pipeline.

– This approach is close to practical use in commercial production, especially for efficiency-driven or R&D-forward projects.

Legal Review

– All assets used for training were owned or created in-house.

– The workflow was developed in tandem with our legal counsel to ensure compliance with current IP and AI regulations.

– No third-party datasets or scraped internet content were used.

Key Findings

1. Image Quality & Realism

– AI-generated imagery was often passable at first glance but degraded under scrutiny (e.g. textures, light falloff, food physics).

– AI struggled with realism in areas like melted cheese, steam, or sauce dynamics.

2. Efficiency & Scalability

– LoRA training was completed in a matter of hours.

– Generated assets in 9:16, 1:1, and 16:9 without requiring reshoots.

– Creative teams could test new looks, compositions, and variants rapidly.
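
As a rough illustration of how one trained model can serve several delivery formats, the sketch below picks output dimensions for each aspect ratio at a roughly constant pixel count. The one-megapixel budget and the snapping to multiples of 64 are assumptions based on common diffusion-model practice, not settings documented in this test, and `dims_for_ratio` is a hypothetical helper.

```python
import math


def dims_for_ratio(ratio_w: int, ratio_h: int,
                   pixel_budget: int = 1024 * 1024,
                   multiple: int = 64) -> tuple:
    """Return (width, height) near pixel_budget for the given aspect ratio.

    Both sides are snapped to the nearest multiple (assumed 64 here),
    so the final ratio is approximate rather than exact.
    """
    aspect = ratio_w / ratio_h
    height = math.sqrt(pixel_budget / aspect)
    width = height * aspect

    def snap(value: float) -> int:
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


for name, (w, h) in {"9:16": (9, 16), "1:1": (1, 1), "16:9": (16, 9)}.items():
    print(name, dims_for_ratio(w, h))
```

Because the snapped sizes only approximate the target ratio, a final crop or resize to the exact delivery dimensions would still be needed.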

3. Cost Implications

– Significant reduction in cost for generating format-specific variations (especially social cutdowns).

– Traditional shoots still required for foundational product fidelity.

4. Motion & Cinematography

– Motion simulations based on iPhone footage tracked in SynthEyes showed promise and are nearing practical viability.

– Lighting and motion consistency remain challenging for AI models but are improving rapidly.

5. Legal & Ethical Clarity

– By training on exclusively owned material, the workflow minimizes IP risk.

– Counsel described this approach as one of the safest current uses of generative AI in a commercial context.

Tools Used

– LoRA (Low-Rank Adaptation) for custom model training

– ControlNet for fine-tuned image control and pose structure

– IP Adapter for asset conditioning

– Depth-to-Image for lighting consistency and 3D approximation

– ComfyUI for node-based visual programming and model orchestration

– SynthEyes for camera motion tracking

– Undercurrent AI as consulting partner for technical R&D and AI ethics guidance

Recommendations for Creative Teams & Clients

– Use AI as a post-production multiplier—not a full replacement for craft.

– Train on your own assets to protect brand integrity and IP.

– Combine traditional production and AI generation for maximum control.

– Engage legal counsel early in pipeline design.

– Begin testing AI motion and framing control tools for use in R&D and early-stage creative visualization.

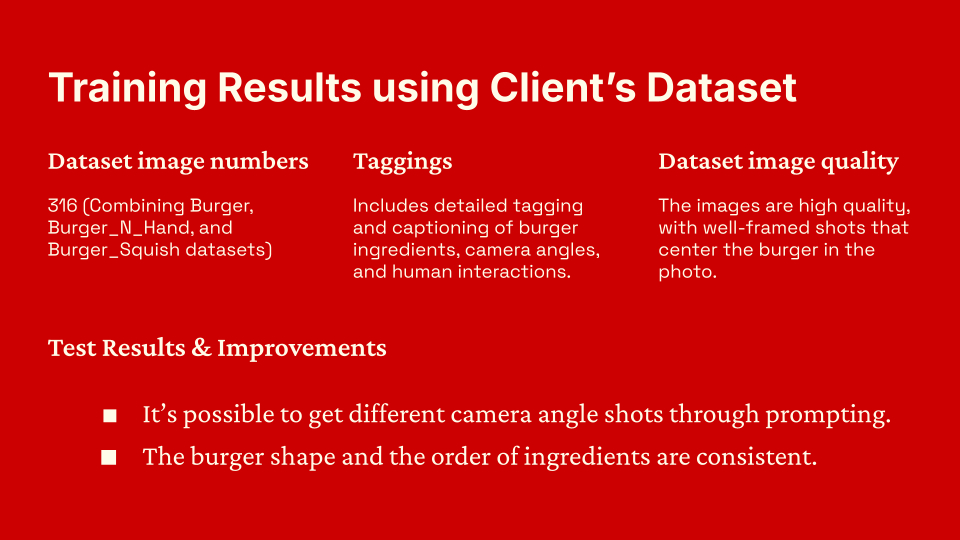

– 316 images across burger-focused sets.

– Detailed tagging for ingredients, angles, and human interactions.

– High-quality, well-framed images.

– Multiple camera angles possible via prompting.

– Ingredient order and burger shape remained consistent.
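
The tagging described above can be sketched as a small captioning helper. This is a minimal illustration only: it assumes the common community convention of a comma-separated caption stored in a `.txt` file next to each training image, and the trigger word, tag vocabulary, and function names are hypothetical rather than taken from the actual dataset.

```python
from pathlib import Path
from typing import List, Optional


def build_caption(trigger: str, ingredients: List[str], angle: str,
                  interaction: Optional[str] = None) -> str:
    """Join a trigger word and descriptive tags into one caption line."""
    parts = [trigger, *ingredients, f"{angle} angle"]
    if interaction:
        parts.append(interaction)
    return ", ".join(parts)


def write_caption(image_path: Path, caption: str) -> Path:
    """Write the caption to a sidecar file, e.g. shot_001.jpg -> shot_001.txt."""
    caption_path = image_path.with_suffix(".txt")
    caption_path.write_text(caption + "\n", encoding="utf-8")
    return caption_path


# Hypothetical tags for a single still.
caption = build_caption(
    trigger="brandburger",
    ingredients=["sesame bun", "beef patty", "cheddar"],
    angle="three-quarter",
    interaction="hand holding burger",
)
print(caption)
```

Keeping ingredient and angle tags consistent across the set is what would let a prompt later request a specific angle or build, as the bullets above describe.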

The 80/20 Reality

Over 500 images were generated using four model variations. Each model took under 6 hours to train and test. Roughly 80% of the outputs were unusable without significant cleanup because of distorted proportions, broken textures, or lighting mismatches. However, the remaining 20% delivered high-fidelity outputs that were:

– Consistent with brand look and feel

– Rendered in multiple aspect ratios

– Legally clean and copyright-safe

These assets proved valuable for supplemental deliverables, social content, and R&D previsualization.

This test shows that AI is not ready to replace human production—but it is ready to augment it in powerful, flexible ways.
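
As a back-of-the-envelope reading of the figures above, the arithmetic can be written out as follows. The inputs are bounds taken from the text ("over 500" images, "under 6 hours" per model), so the results are approximate; running the models one after another is an assumption.

```python
def usable_estimate(total_images: int, usable_share: float) -> int:
    """Approximate number of usable images for a given usable share."""
    return round(total_images * usable_share)


TOTAL_IMAGES = 500        # "over 500" in the text, so a lower bound
USABLE_SHARE = 0.20       # the roughly 20% that held up
MODELS = 4
MAX_HOURS_PER_MODEL = 6   # "under 6 hours" in the text, so an upper bound

usable = usable_estimate(TOTAL_IMAGES, USABLE_SHARE)
max_hours = MODELS * MAX_HOURS_PER_MODEL      # assumes models run sequentially
generated_per_usable = TOTAL_IMAGES / usable

print(f"usable images: about {usable}")
print(f"train/test time: under {max_hours} hours in total")
print(f"images generated per usable image: about {generated_per_usable:.0f}")
```

In other words, roughly one image in five held up, which is why the recommendation is to budget for review and selection rather than to expect every output to be deliverable.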

Final Note

This test was conducted in March 2025, and the tools used are evolving at an exponential pace. Some techniques in this paper may become outdated in a matter of weeks. Our aim is not to present a fixed blueprint but to document a moment-in-time experiment that opens doors to what’s next.

Special thanks to photographer Nate Dorn for capturing the process so vividly; our AI consultants at UndercurrentXR for their technical guidance; food stylist Vanessa Parker for elevating every detail; and the RiTE Media team—Kevin Christopher, Hoss Soudi, Nika Tabidze, Johnny Meyer, and Ryan Monolopolous—for bringing this vision to life.